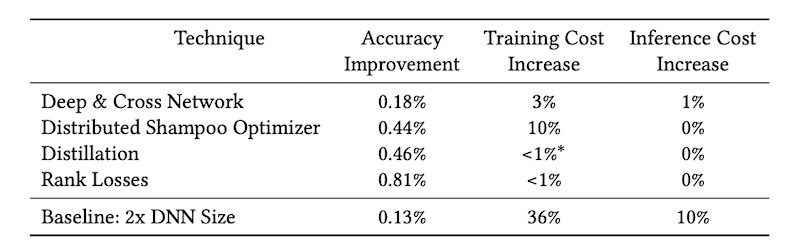

Weights & Biases on X: "Distributed Shampoo is a second-order optimization method that makes training large models much faster 🏃♀️ @borisdayma and @_arohan_ show that it trains up to 10x faster than
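
The post above calls Shampoo a second-order method. Below is a minimal sketch of the basic Shampoo update for a single matrix parameter. For a gradient `G`, it accumulates a left statistic `L += G Gᵀ` and a right statistic `R += Gᵀ G`, then steps along `L^{-1/4} G R^{-1/4}`. It assumes that plain, single-device form of the update. The names `inverse_root` and `MatrixShampoo`, the `eps` initialisation, and the toy quadratic objective are hypothetical illustrations. The sketch deliberately leaves out the practical additions that distributed implementations layer on top, such as learning-rate grafting, blocking of large tensors, and sharded preconditioner computation. It is not the API of any released Shampoo library.

```python
import numpy as np


def inverse_root(mat, p, eps=1e-12):
    """Return mat**(-1/p) for a symmetric positive (semi)definite matrix."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.maximum(vals, eps)  # clamp round-off negatives before the fractional power
    return (vecs * vals ** (-1.0 / p)) @ vecs.T


class MatrixShampoo:
    """Hypothetical single-matrix Shampoo sketch: no grafting, blocking, or distribution."""

    def __init__(self, shape, lr=1.0, eps=1e-4):
        m, n = shape
        self.L = eps * np.eye(m)  # left statistic: eps*I plus running sum of G @ G.T
        self.R = eps * np.eye(n)  # right statistic: eps*I plus running sum of G.T @ G
        self.lr = lr

    def step(self, W, G):
        self.L += G @ G.T
        self.R += G.T @ G
        # Preconditioned direction L^{-1/4} G R^{-1/4}
        direction = inverse_root(self.L, 4) @ G @ inverse_root(self.R, 4)
        return W - self.lr * direction


# Toy problem (assumption): minimize 0.5 * ||W - T||_F^2, whose gradient is W - T.
T = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
W = np.zeros_like(T)
opt = MatrixShampoo(T.shape)
initial_loss = 0.5 * np.sum((W - T) ** 2)
for _ in range(100):
    W = opt.step(W, W - T)
final_loss = 0.5 * np.sum((W - T) ** 2)
print(f"loss: {initial_loss:.2f} -> {final_loss:.4f}")
```

The two Kronecker-factor statistics keep memory at `m² + n²` rather than the `(mn)²` a full-matrix preconditioner would need. This is why the factored form is the starting point for scaling the method up.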

A Distributed Data-Parallel PyTorch Implementation of the Distributed Shampoo Optimizer for Training Neural Networks At-Scale

Boris Dayma 🖍️ on X: "We ran a grid search on each optimizer to find best learning rate. In addition to training faster, Distributed Shampoo proved to be better on a large

Rohan Anil on X: "Code for Distributed Shampoo: a scalable second order optimization method https://t.co/jzfkM3SOPN 💥 Joint work w @GuptaVineetG State of the art on MLPerf ResNet-50 training to reach 75.9% accuracy

Dheevatsa Mudigere on LinkedIn: Very happy to see this work published - “Distributed Shampoo optimizer for…

A Distributed Data-Parallel PyTorch Implementation of the Distributed Shampoo Optimizer for Training Neural Networks At-Scale | DeepAI

AI Summary: A Distributed Data-Parallel PyTorch Implementation of the Distributed Shampoo Optimizer for Training Neural Networks At-Scale

![JAX Meetup: Scalable second order optimization for deep learning [ft. Rohan Anil] - YouTube](https://i.ytimg.com/vi/1ZbQSrC0fBc/hq720.jpg?sqp=-oaymwE7CK4FEIIDSFryq4qpAy0IARUAAAAAGAElAADIQj0AgKJD8AEB-AH-CYAC0AWKAgwIABABGGAgYChgMA8=&rs=AOn4CLBHDmLiehuxYASwNgQHFIGwutLnUQ)